Bill Manaris is a computer science researcher, musician, and educator. As an undergraduate, he studied computer science and music at the University of New Orleans. He holds M.S. and Ph.D. degrees in computer science from the University of Louisiana. He also studied Classical and Jazz guitar.

Currently, he is a professor of Computer Science and Director of the Computing Arts program at the College of Charleston, South Carolina. His areas of interest include computer music, artificial intelligence, and human-computer interaction. He coauthored Making Music with Computers: Creative Programming in Python, and with his students he designs systems for computer-aided analysis, composition, and performance in art and music.

He works in computational musicology with a deep interest in Zipf’s law and the golden ratio and their application in music and art. Bill’s research has been supported by the National Science Foundation, The Louisiana Board of Regents, Google, and IBM.

Can you talk about your music and computer programming background?

Music came first for me. At age thirteen I started to play the classical guitar. I grew up in Greece, so I went to the conservatory. The music that really attracted me was rock & roll: The Doors, Led Zeppelin, and Deep Purple. I ended up forming a high-school band, and I started learning Jazz guitar. At age 19, I went to New Orleans to study electrical engineering. I took my first computer programming class, which was in Fortran, and I was transformed. It was the first time I had ever touched a computer.

When I was in high school in the 1970s, personal computers had not been invented yet. It was the first time in my life that I not only did all the homework assignments that were asked of me but also spent extra time tinkering, and I wrote a small computer game. I decided to switch my major to computer science. As an undergraduate, I pursued music and computer science as much as I could. There was no way of combining the two yet, so I received a minor in humanities which mostly consisted of music theory courses – all of them. I went to graduate school and again there was no way to combine the two, so I focused on computer science. I lived the life of a computer scientist, and the life of a musician – two separate lives. Then, my wife and I decided to move to the College of Charleston, South Carolina, which is the thirteenth oldest university in the United States. My wife is also a computer scientist. By moving to the college, a new world opened up for me. I could finally combine the two loves of my life – music and computing.

The first ten years there, I was doing research in computational musicology. This combines artificial intelligence, music, and computer programming. My students and I tried to find ways to teach a computer to recognize beautiful music. A colleague of mine told me not to use that word as it is not measurable. He mentioned that “aesthetic pleasantness” may be a better word. For the first ten years, my students, colleagues and I taught computers how to recognize music aesthetics, pieces that humans find aesthetically pleasing. We taught computers how to identify them and we had significant success. We were able to teach neural networks three major tasks. It came down to Zipf’s Law. We developed hundreds of different ways to measure music. We taught neural networks how to tell composers apart, which is a task that humans can do well.

Think Bach versus Beethoven. We trained the computer with certain pieces, and then tested it with pieces it had not “heard” before. It was able to tell the difference with over ninety percent accuracy, which is magical. We had similar accuracy with recognizing pieces of music from different genres. Genres that are neighboring can be very confusing to humans. Sometimes it has to do with time and not music style. Again, the neural network was able to tell with over ninety percent accuracy. If you played Baroque music and jumped over to Classical and then to Romantic style, it was able to identify the genres. We included Jazz music and Country Music.

We were very lucky that we were given fourteen thousand five hundred high-quality recordings of classical music from The Classical Music Archives back in 2003. They also gave us one month of their web log, which recorded what people were listening to on their website during that month. That helped a lot. The third neural network experiment was also magical. The most popular piece was Für Elise by Beethoven, by the way. We took the thousand most popular songs and the thousand least popular songs that had at least one click, and we trained a neural network to tell the two groups apart, with over ninety percent accuracy. The next ten years consisted of doing what I love, creating music.

Can you talk about your book, Making Music with Computers: Creative Programming in Python?

Back in 2007, I started teaching a class called “Computers, Music and Art.” Everything started slowly unfolding then. I started looking for coding libraries for music, and I looked through all of them. The only thing that came close to what I was looking for was a library called jMusic written by two Australian guys: Andrew Brown and Andrew Sorensen. They created it to be able to perform live with computers.

That was the best library I found and I reached out to Andrew Brown and I asked him if I could take his library and wrap it with Python. He said it was o.k. A friendship developed from that and we coauthored the book. The Python Music library is an extension of jMusic but it has several different things. The coding took about five to six years to write and the book took another three years to write.

What can people learn from your book?

How to learn music, but also how to learn coding. If they already know how to code, this world opens up to them. If they have an interest in music and they didn’t have the opportunity to learn a musical instrument, the computer can be viewed as a musical instrument.

The synthesizers that we now have in our computers used to cost thousands of dollars in the 1980s. A computer is a musical instrument. Through a set of libraries people can start making music without having to spend years at a conservatory learning how to play the violin, piano, or guitar.

How did Iannis Xenakis influence you?

Xenakis was an avant-garde music composer. He was actually three things. He was a mathematician. During the Greek civil war, he went to Paris where he became an architect. He worked with Le Corbusier, who was a big name in architecture. While in Paris he also trained with the French composer Messiaen. This is how Xenakis became a composer.

Xenakis didn’t learn much music theory, such as counterpoint, but used the mathematical side of things. He influenced me indirectly. I must have been eight years old and my parents took me to a gallery. One of my parents’ friends, a visual artist / sculptor, had a show there. Gregory Semitecolo was his name. He also had a tape loop going through the room onto the ceiling. He was using a reel-to-reel tape player but there were no reels. Instead, the tape was going around the room in strange angles on pulleys. He asked me to start playing with the tape player controls and told me to do whatever I wanted.

In a way he made me act like a random function. That guy was directly influenced by Xenakis and others like him. It recently occurred to me that this was my baptism into what I do now. The universe works in mysterious ways. At the time, I had no idea what it was or why it was important. Most people know Xenakis as a music composer, but he was actually writing code to create his compositions. People who admire him musically miss the most important thing: that he did his work by writing Fortran code. That was twenty years before I took my first Fortran class. Xenakis was an algorithmic music composer. Of course, there are other people doing this who are still living, like David Cope.

Can you talk about artificial intelligence in music and Monterey Mirror?

The term artificial intelligence is so hyped. Do you think there is artificial intelligence out there?

In Tesla cars there is a lot of software, ranging from operating the car and controlling the windshield wipers to detecting other cars. It has a degree of intelligence, but I am not sure how to rate the level of that intelligence.

There you go. So, this is the issue. It all depends on what you mean by artificial intelligence. In essence we have these devices out there, which have been programmed by people who know how to do that, to contain subsets of human intelligence. Because these are computing machines, they can do things very quickly. They perform tasks faster than humans. These are tasks that when a human performs them, we think are intelligent. For example, the calculator on your smartphone. Or a spelling checker. I am very comfortable with that definition of AI.

Now about Monterey Mirror… yes, there is artificial intelligence within it. Computer scientists may want to use the term computer science. We use AI techniques such as genetic algorithms and neural networks. Monterey Mirror has a statistical model inside called a Markov model. Once I explain it, though, the artificial intelligence flavor will go away. A Markov model is a piece of software that can learn probabilities. It learns which notes follow which notes. If you think of a chromatic scale within a Blues genre, there are some notes that are not going to follow certain notes. You are rarely going to hear a half-step up from the tonic. But a half-step up from the fourth is very interesting.

The model learns probabilities. How likely is it to go a half-step or a full step? If you keep teaching it by loading your notes into the computer, it will learn the probabilities of your music playing. If you listen to me playing the guitar, you can recognize me by the types of notes I am playing and where I choose to go. This is what it does and it’s not perfect. First you teach it and then you put a genetic algorithm on top of it. You can ask it to generate musical lines. The computer is fast and can do it very quickly. With Monterey Mirror we applied metrics or measurements based on Zipf’s law.
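The idea of learning which notes follow which can be sketched in a few lines of Python. This is only an illustration, not Monterey Mirror's code: it assumes notes are written as MIDI pitch numbers and that only the immediately preceding note matters (a first-order model), and the names `train_markov`, `transition_probability`, and `generate_line` are hypothetical.

```python
import random
from collections import Counter, defaultdict

def train_markov(notes):
    """Count how often each note is followed by each other note."""
    transitions = defaultdict(Counter)
    for current, following in zip(notes, notes[1:]):
        transitions[current][following] += 1
    return transitions

def transition_probability(transitions, current, following):
    """Estimated probability that `following` comes right after `current`."""
    counts = transitions.get(current, Counter())
    total = sum(counts.values())
    return counts[following] / total if total else 0.0

def generate_line(transitions, start, length, rng=random):
    """Random walk through the learned transitions, starting from `start`."""
    line = [start]
    while len(line) < length:
        options = transitions.get(line[-1])
        if not options:  # dead end: this note was never followed by anything
            break
        pitches, counts = zip(*options.items())
        line.append(rng.choices(pitches, weights=counts, k=1)[0])
    return line

# Hypothetical training phrase, written as MIDI pitch numbers (assumption).
phrase = [60, 63, 65, 66, 65, 63, 60, 63, 65, 63, 60]
model = train_markov(phrase)
```

Feeding the model more of a player's phrases sharpens the counts, which is the sense in which it gradually learns the probabilities of that player's style.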

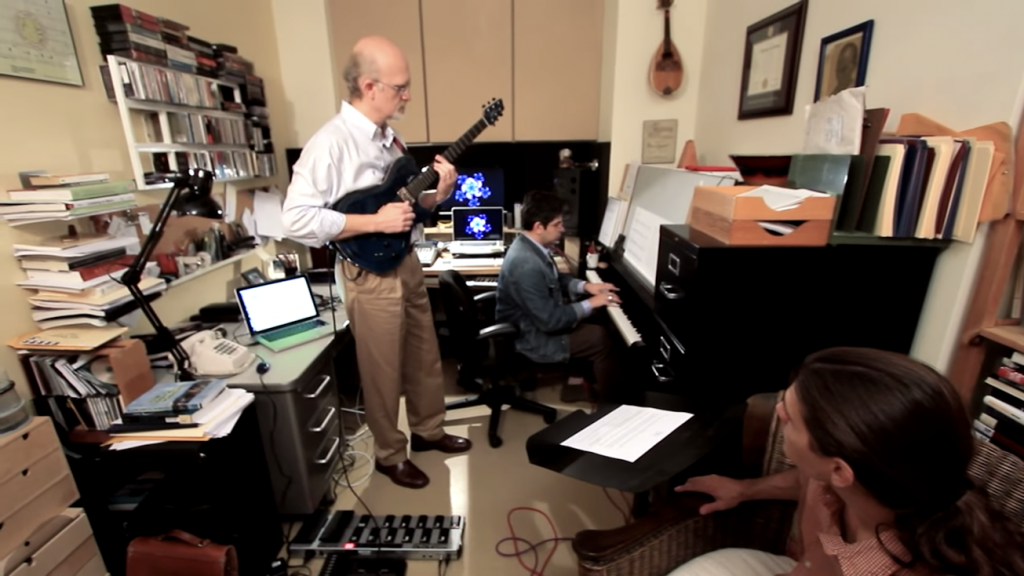

Out of a million musical lines that the Markov model generates, our code selects one, and plays it back. It picks that line based on how closely it matches probabilities in Bach’s Toccata and Fugue in D minor, I think, which is what we used as our model for aesthetically pleasing music in this case. It could be any piece or set of pieces. In the YouTube video of me playing with Monterey Mirror I play George Gershwin’s “Summertime,” inspired more by the Miles Davis version.
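The selection step described above can be approximated as "score every candidate against a reference piece and keep the closest one." The sketch below is a simplified stand-in, not the Zipf-based metrics or genetic algorithm the interview mentions: it assumes MIDI pitch numbers, compares only melodic-interval frequencies, and uses hypothetical names (`interval_distribution`, `distance`, `select_best`) and made-up example melodies.

```python
from collections import Counter

def interval_distribution(notes):
    """Relative frequency of each melodic interval (in semitones)."""
    intervals = [b - a for a, b in zip(notes, notes[1:])]
    counts = Counter(intervals)
    total = sum(counts.values())
    if total == 0:  # fewer than two notes: no intervals to measure
        return {}
    return {interval: n / total for interval, n in counts.items()}

def distance(candidate, reference):
    """Sum of absolute differences between two interval distributions."""
    p = interval_distribution(candidate)
    q = interval_distribution(reference)
    return sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in set(p) | set(q))

def select_best(candidates, reference):
    """Return the candidate whose interval statistics are closest to the reference."""
    return min(candidates, key=lambda line: distance(line, reference))

# Made-up data for illustration only (assumption).
reference = [62, 60, 62, 60, 58, 57, 55, 57]
candidates = [
    [60, 72, 48, 72, 48],        # wide leaps
    [64, 62, 64, 62, 60, 59],    # stepwise motion, like the reference
    [60, 60, 60, 60, 60],        # repeated note
]
best = select_best(candidates, reference)
```

With a million candidates the same `min` call still works; only the scoring function would need to be replaced by richer measurements.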

I have another question for you. When Monterey Mirror responds to my guitar input of “Summertime,” who do you think the composer is? The choices are the computer, Bach because of the Toccata and Fugue model — or do you think it is me who created the algorithm?

I would say it’s all three in a way. The creation seems to be coming from all aspects so that is a tricky question.

I guess what I want to point out is that human composers have a similar process. They have some type of mechanism. They have listened to all kinds of music before them, which influences them. Bach wrote over a thousand pieces, turning some of them out every weekend. He wrote a total of three hundred and seventy-one chorales. He had a new one every Sunday.

He employed algorithms as he was busy and had a large family with many children. The point is that regular composers have a similar process; they just don’t advertise it. Of course, Bach didn’t have a computer; he had paper and pencil to compose and used mathematical squares. What I am trying to say is composers use algorithms.

What are Zipf’s law and the golden ratio, and what are their applications in music?

I will start with the golden ratio which is older and Plato speaks of it. It is at least two thousand years old. It probably originated in Babylon and/or Egypt. Pythagoras brought it over. It has to do with ratios. It’s about the ratios between two quantities. If one quantity is 0.6 of the other, that ratio feels very natural or pleasing to human eyes and ears. People have applied it consciously or unconsciously, which is more interesting. Perhaps because it sounded good. We find it definitely in music, art, and architecture.
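For reference, the ratio described above can be stated exactly. Two quantities $a > b > 0$ are in the golden ratio when the larger is to the smaller as their sum is to the larger:

$$
\frac{a}{b} = \frac{a+b}{a} = \varphi = \frac{1+\sqrt{5}}{2} \approx 1.618,
\qquad
\frac{b}{a} = \frac{1}{\varphi} = \varphi - 1 \approx 0.618 .
$$

The "0.6" mentioned in the answer is a rounding of $1/\varphi \approx 0.618$.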

Zipf’s law is a relatively recent technique. Zipf was a linguist. He died in 1950. A way to understand Zipf’s law is to take any book written by a human. If you measure how many times certain words appear in the book you will understand. Let’s say the most popular word appears in a book one thousand times. The second most popular word is going to appear about half as many times. The third most popular word will appear one third as many times.
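The word-counting experiment described above is easy to try. The sketch below counts words, ranks them, and prints what an ideal Zipf distribution would predict for the "one thousand times" example; the sample sentence and the names `rank_frequency` and `ideal_zipf` are illustrative assumptions.

```python
from collections import Counter

def rank_frequency(items):
    """Counts sorted from most to least frequent: [(item, count), ...]."""
    return Counter(items).most_common()

def ideal_zipf(top_count, n_ranks):
    """Counts predicted by an ideal Zipf distribution: top_count / rank."""
    return [top_count / rank for rank in range(1, n_ranks + 1)]

# Tiny made-up text; a real test would use a whole book (assumption).
text = "the cat and the dog and the bird"
for rank, (word, count) in enumerate(rank_frequency(text.split()), start=1):
    print(rank, word, count)

# Predicted counts for ranks 1 to 3 when the top word appears 1000 times.
print(ideal_zipf(1000, 3))
```

Real texts only approximate the ideal curve, and a text this short will not follow it at all; the pattern emerges over thousands of words.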

This goes on and on and on. We asked ourselves if we could use this in music, but what we didn’t know is that Zipf had already done it and we missed it. We started writing code and then went back to his book and found it. This was more of a validation for us. Had we known he applied the law to music, we wouldn’t have done the work at all. What we have found is that similar probabilities occur in music, in notes. We looked at the pitches of notes, durations, combination of pitch and duration, harmonic and melodic intervals, and chords, among others.

We came up with over three hundred different ways of measuring such probabilities using mathematical ideas. We found through the fluctuations and differences of probabilities we can tell composers apart. Probably because their brains or their process or the pieces they liked were different. We can tell genres apart, and if a piece of music is going to be considered pleasant or not. We can predict. We can also use Zipf’s law with genetic algorithms to create new music.
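One common way to turn a rank-frequency distribution into a single number is the slope of a straight line fitted to log(count) against log(rank); an ideal Zipf distribution gives a slope of -1, and a perfectly uniform one gives 0. The sketch below applies that to a few attributes of a melody. It is an assumption about how such a measurement could look, not the authors' actual metrics, and the names `zipf_slope` and `melody_metrics` are hypothetical.

```python
import math
from collections import Counter

def zipf_slope(events):
    """Least-squares slope of log(count) against log(rank)."""
    counts = sorted(Counter(events).values(), reverse=True)
    if len(counts) < 2:
        raise ValueError("need at least two distinct events")
    xs = [math.log(rank) for rank in range(1, len(counts) + 1)]
    ys = [math.log(c) for c in counts]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx

def melody_metrics(melody):
    """One slope per musical attribute of a list of (pitch, duration) notes."""
    pitches = [p for p, _ in melody]
    durations = [d for _, d in melody]
    intervals = [b - a for a, b in zip(pitches, pitches[1:])]
    return {
        "pitch": zipf_slope(pitches),
        "duration": zipf_slope(durations),
        "melodic_interval": zipf_slope(intervals),
    }
```

A vector of such numbers, one per attribute, is the kind of input a classifier could use to compare composers, genres, or popularity.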

Why is algorithmic music composition and performance the way of the present and future?

There are two ways to answer this. Composers, performers, and artists use technology. Whenever a new technology like the tape recorder or the piano is invented, artistic people take it up to create and express the beauty that is inside of them or the beauty that they see around them. They reformulate it in a new way. We are surrounded by computing devices. You probably have three or four of them around you. We have all these CPUs sitting idle and they are programmable. Xenakis wanted people to learn how to program. He didn’t say it in those exact words, but he did.

The second way of answering is that in the previous century we had the three Rs: Reading, Writing, and Arithmetic. You needed to know all three to be educated. Now we have a fourth R which is Programming. There’s an R in there. When new skills like reading and writing first came out, society was divided into two. The ones who knew how to and the ones who didn’t. The ones who knew how to read controlled the people who didn’t. In Program or Be Programmed: Ten Commands for a Digital Age, Rushkoff makes this point. He says that we have reached this point again.

The people who know how to program are controlling the lives of the people who do not. You can think of Facebook and email. There are things that you can do and there are things that you cannot do. You gravitate towards the things that you can do. That constrains your creativity. By learning how to program you can develop things that have not been developed before. If you have a creative idea, you can use the computer to write your own algorithm. If you are a creator or a musician or a composer your new ideas can be created.

You can use the computer as a drafting tool. You don’t always have to work in code and then you can potentially grab your musical instrument. You don’t have to tell people how you did it. The sky is the limit. It is the new technique and those who know it will be much more creative than those who do not. A prediction would be that in ten to twenty years from now, every conservatory will be teaching this.

What’s next for you and what else do you want to accomplish?

I want to make more music. That’s it, short and sweet.